Unlike traditional AI models that respond to single prompts (like ChatGPT’s basic Q&A mode), AI agents can plan, reason, and execute multi-step tasks by interacting with tools, data sources, APIs, and even other agents.

Sounds abstract? That’s because it is. While most might agree with this definition of, or expectation for, what agentic AI can do, it’s so theoretical that many AI agents available today wouldn’t make the grade.

As my colleague Sean Falconer noted recently, AI agents are in a “pre-standardization phase.” While we might broadly agree on what they should or could do, today’s AI agents lack the interoperability they’ll need not just to do something, but to actually do work that matters.

Think about how many data systems you or your applications need to access daily, such as Salesforce, wiki pages, or other CRMs. If these systems aren’t currently integrated or they lack compatible data models, you’ve just added more work to your schedule (or lost time waiting). Without standardized communication for AI agents, we’re just building a new kind of data silo.

No matter how the industry changes, having the expertise to turn the potential of AI research into production systems and business outcomes will set you apart. I’ll break down three open protocols that are emerging in the agent ecosystem and explain how they could help you build useful AI agents, that is, agents that are viable, sustainable solutions for complex, real-world problems.

The current state of AI agent development

Before we get into AI protocols, let’s review a practical example. Imagine we’re interested in learning more about business revenue. We could ask the agent a simple question by using this prompt:

Give me a prediction for Q3 revenue for our cloud product.

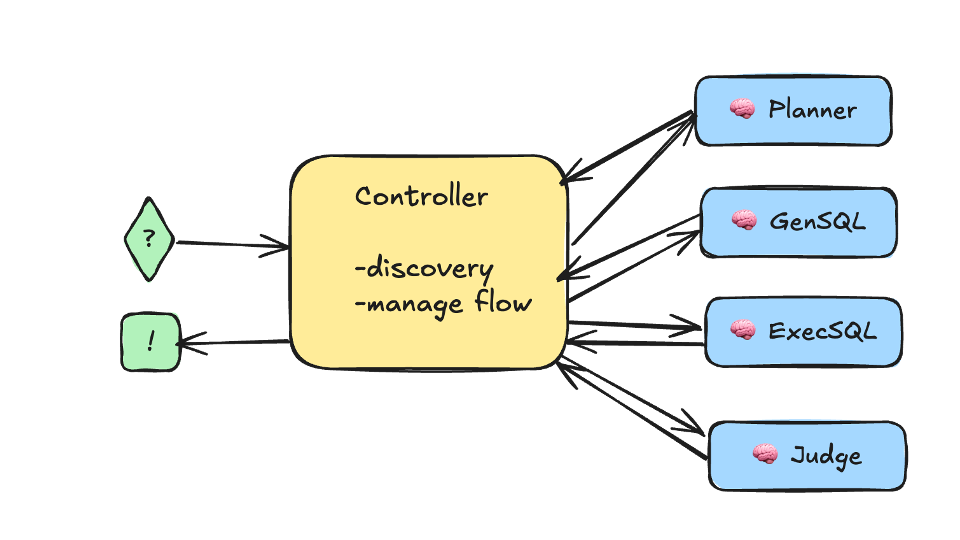

From a software engineering perspective, the agentic program uses its AI models to interpret this input and autonomously build an execution plan toward the desired goal. How it accomplishes that goal depends entirely on the list of tools it has access to.

When our agent starts up, it will first search for the tools under its /tools directory. This directory contains guiding files that describe what falls within the agent’s capabilities. For example:

/tools/list
/Planner
/GenSQL
/ExecSQL
/Judge

You can also view it in this diagram:

[Diagram. Credit: Confluent]

The main agent receiving the prompt acts as a controller. The controller has discovery and management capabilities and is responsible for communicating directly with its tools and other agents. This works in five basic steps:

- The controller calls on the planning agent.

- The planning agent returns an execution plan.

- The judge reviews the execution plan.

- The controller uses GenSQL and ExecSQL to execute the plan.

- The judge reviews the final result and provides feedback to determine whether the plan needs to be revised and rerun.
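The five steps amount to a plan, review, execute, review loop. Here is a minimal Go sketch of that controller, assuming each tool can be modeled as a plain function; the Controller type, its fields, and the MaxRounds cap are hypothetical, and a real agent would back Plan, Judge, and GenSQL with model calls and ExecSQL with a database driver.

```go
package main

import (
	"errors"
	"fmt"
)

// Controller wires together the tools from the /tools listing. Each field is
// a hypothetical hook standing in for an agent or tool call.
type Controller struct {
	Plan      func(goal, feedback string) ([]string, error)       // planning agent
	Judge     func(plan, results []string) (ok bool, note string) // judge agent
	GenSQL    func(step string) (string, error)                   // plan step -> SQL
	ExecSQL   func(query string) (string, error)                  // runs the SQL
	MaxRounds int                                                 // replanning budget
}

// Run plans, has the judge review the plan, executes each step with GenSQL
// and ExecSQL, has the judge review the results, and replans with the
// judge's feedback until the results are approved or rounds run out.
func (c *Controller) Run(goal string) ([]string, error) {
	feedback := ""
	for round := 0; round < c.MaxRounds; round++ {
		plan, err := c.Plan(goal, feedback) // steps 1 and 2
		if err != nil {
			return nil, err
		}
		if ok, note := c.Judge(plan, nil); !ok { // step 3: review the plan
			feedback = note
			continue
		}
		var results []string
		for _, step := range plan { // step 4: execute the plan
			query, err := c.GenSQL(step)
			if err != nil {
				return nil, err
			}
			out, err := c.ExecSQL(query)
			if err != nil {
				return nil, err
			}
			results = append(results, out)
		}
		ok, note := c.Judge(plan, results) // step 5: review the outcome
		if ok {
			return results, nil
		}
		feedback = note
	}
	return nil, errors.New("no approved result within MaxRounds")
}

func main() {
	c := &Controller{
		Plan: func(goal, feedback string) ([]string, error) {
			return []string{"sum Q1 and Q2 cloud revenue", "extrapolate to Q3"}, nil
		},
		Judge:     func(plan, results []string) (bool, string) { return true, "" },
		GenSQL:    func(step string) (string, error) { return "-- SQL for: " + step, nil },
		ExecSQL:   func(query string) (string, error) { return "ok " + query, nil },
		MaxRounds: 3,
	}
	results, err := c.Run("Give me a prediction for Q3 revenue for our cloud product.")
	fmt.Println(results, err)
}
```

Feeding the judge’s note back into the planner is what lets the agent revise and rerun; capping the rounds keeps a judge that never approves from looping forever.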

As you can imagine, many events and messages pass between the controller and the rest of the agents. This is what we’ll refer to as AI agent communication.

Budding protocols for AI agent communication

A battle is raging in the industry over the right way to standardize agent communication. How do we make it easier for AI agents to access tools or data, communicate with other agents, or process human interactions?

Today, we have the Model Context Protocol (MCP), the Agent2Agent (A2A) protocol, and the Agent Communication Protocol (ACP). Let’s take a look at how these AI agent communication protocols work.

Model Context Protocol

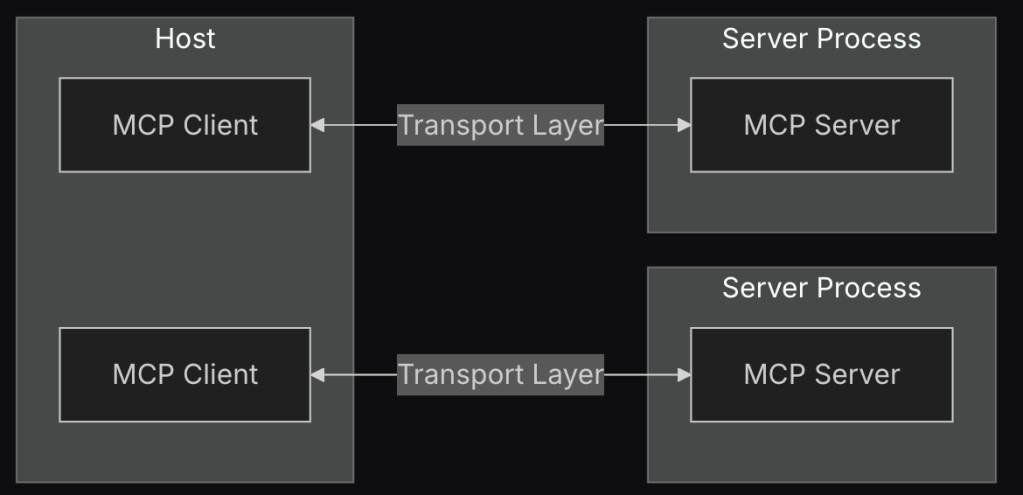

Model Context Protocol (MCP), created by Anthropic, was designed to standardize how AI agents and models manage, share, and use context across tasks, tools, and multi-step reasoning. Its client-server architecture treats AI applications as clients that request information from a server, which provides access to external resources.

Let’s assume all the data is stored in Apache Kafka topics. We can build a dedicated Kafka MCP server, and Claude, Anthropic’s AI model, can act as our MCP client.

In this example on GitHub, authored by Athavan Kanapuli, Akan asks Claude to connect to his Kafka broker and list all the topics it contains. With MCP, Akan’s client application doesn’t need to know how to access the Kafka broker. Behind the scenes, his client sends the request to the server, which takes care of translating the request and running the relevant Kafka function.

In Akan’s case, there were no topics available. The client then asks whether Akan would like to create a topic with a specific number of partitions and a replication factor. Just as with Akan’s first request, the client doesn’t need any knowledge of how to create or configure Kafka topics and partitions. From here, Akan asks the agent to create a “countries” topic and later to describe it.

For this to work, you need to define what the server can do. In Athavan Kanapuli’s Akan project, this code lives in the handler.go file, which holds the list of functions the server can handle and execute. Here is the CreateTopic example:

```go
import (
	"context"
	"fmt"

	// Assumed: the metoro-io/mcp-golang SDK, which provides these helpers.
	mcp_golang "github.com/metoro-io/mcp-golang"
)

// CreateTopic creates a new Kafka topic.
// Optional parameters that can be passed via FuncArgs are:
//   - NumPartitions: number of partitions for the topic
//   - ReplicationFactor: replication factor for the topic
func (k *KafkaHandler) CreateTopic(ctx context.Context, req Request) (*mcp_golang.ToolResponse, error) {
	// Stop early if the MCP client has already canceled the request.
	if err := ctx.Err(); err != nil {
		return nil, err
	}
	// Delegate topic creation to the Kafka client held by the handler.
	if err := k.Client.CreateTopic(req.Topic, req.NumPartitions, req.ReplicationFactor); err != nil {
		return nil, err
	}
	// Reply with text the MCP client (here, Claude) can relay to the user.
	return mcp_golang.NewToolResponse(mcp_golang.NewTextContent(fmt.Sprintf("Topic %s is created", req.Topic))), nil
}
```
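To see the server side of that exchange without a running broker or the MCP SDK, here is a self-contained sketch of a tool registry: a map from tool names to handler functions, plus a dispatch step that looks up and runs the requested tool, which is roughly the role handler.go plays. The fakeKafka type, the tool names, and the argument keys are hypothetical; a real server would call a Kafka admin client and wrap results in MCP tool responses.

```go
package main

import (
	"errors"
	"fmt"
	"sort"
)

// fakeKafka is an in-memory stand-in for a Kafka admin client.
type fakeKafka struct {
	topics map[string]int // topic name -> partition count
}

// ToolHandler takes decoded JSON arguments and returns text for the client.
type ToolHandler func(args map[string]any) (string, error)

// newServer registers the tools this server can handle, keyed by tool name.
func newServer(k *fakeKafka) map[string]ToolHandler {
	return map[string]ToolHandler{
		"list_topics": func(map[string]any) (string, error) {
			names := make([]string, 0, len(k.topics))
			for n := range k.topics {
				names = append(names, n)
			}
			sort.Strings(names) // map order is random; sort for stable output
			return fmt.Sprint(names), nil
		},
		"create_topic": func(args map[string]any) (string, error) {
			name, ok := args["topic"].(string)
			if !ok || name == "" {
				return "", errors.New("missing topic name")
			}
			partitions := 1 // default when the caller omits it
			if p, ok := args["partitions"].(float64); ok { // JSON numbers decode as float64
				partitions = int(p)
			}
			if _, exists := k.topics[name]; exists {
				return "", fmt.Errorf("topic %s already exists", name)
			}
			k.topics[name] = partitions
			return fmt.Sprintf("Topic %s is created", name), nil
		},
	}
}

// dispatch looks up the requested tool and runs it, as a server would after
// decoding a tool call from the client.
func dispatch(tools map[string]ToolHandler, name string, args map[string]any) (string, error) {
	h, ok := tools[name]
	if !ok {
		return "", fmt.Errorf("unknown tool %q", name)
	}
	return h(args)
}

func main() {
	server := newServer(&fakeKafka{topics: map[string]int{}})
	calls := []struct {
		name string
		args map[string]any
	}{
		{"list_topics", nil},
		{"create_topic", map[string]any{"topic": "countries", "partitions": 3.0}},
		{"list_topics", nil},
	}
	for _, call := range calls {
		out, err := dispatch(server, call.name, call.args)
		fmt.Println(out, err)
	}
}
```

Because the client only ever names a tool and passes arguments, the server is free to change how each handler talks to Kafka without touching the client.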

While this example uses Apache Kafka, a widely adopted open-source technology, Anthropic generalizes the approach and defines hosts. Hosts are the large language model (LLM) applications that initiate connections. Every host can have multiple clients, as described in Anthropic’s MCP architecture diagram:

[MCP architecture diagram. Credit: Anthropic]
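The split between hosts, clients, and servers can be modeled in a few lines of Go. This is a minimal, hypothetical sketch, assuming (per the MCP architecture) that each client holds a dedicated connection to exactly one server; the Host, Client, Connect, and Route names are ours rather than an SDK’s, and no real transport is involved.

```go
package main

import "fmt"

// Client represents one MCP client with a dedicated 1:1 connection to a
// single server. Here it only records the tool names that server advertised;
// a real client would also hold the transport.
type Client struct {
	Server string
	Tools  []string
}

// Host is the LLM application that initiates connections and owns one
// client per server.
type Host struct {
	clients map[string]*Client
}

func NewHost() *Host { return &Host{clients: make(map[string]*Client)} }

// Connect creates a new client dedicated to the named server.
func (h *Host) Connect(server string, tools []string) {
	h.clients[server] = &Client{Server: server, Tools: tools}
}

// Route returns the server whose client advertised the tool. If several
// servers expose the same tool name, which one wins is unspecified here.
func (h *Host) Route(tool string) (string, bool) {
	for name, c := range h.clients {
		for _, t := range c.Tools {
			if t == tool {
				return name, true
			}
		}
	}
	return "", false
}

func main() {
	h := NewHost()
	h.Connect("kafka", []string{"list_topics", "create_topic"})
	h.Connect("postgres", []string{"run_query"})
	server, ok := h.Route("create_topic")
	fmt.Println(server, ok)
}
```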

An MCP server for a database will expose all of the database’s functionality through a similar handler. However, if you want to get more sophisticated, you can define prompt templates dedicated to your service.

For example, in a healthcare database, you could have dedicated functions for patient health records. This simplifies the experience and provides prompt guardrails that protect sensitive, private patient information while ensuring accurate results. There’s much more to learn, and you can dive deeper into MCP here.
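As a sketch of what such a guardrail could look like, the template below validates its argument before any text is rendered for the model. It is loosely modeled on MCP prompts (a named template with declared arguments that a client requests from the server); the patient_summary name, the ID format of “P” plus six digits, and the validate and render hooks are all hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
)

// PromptTemplate is a server-defined prompt that a client requests by name.
// validate and render are hypothetical hooks for this sketch.
type PromptTemplate struct {
	Name        string
	Description string
	Required    []string
	validate    func(args map[string]string) error
	render      func(args map[string]string) string
}

// Get checks arguments before rendering, so malformed input never reaches the model.
func (p PromptTemplate) Get(args map[string]string) (string, error) {
	for _, k := range p.Required {
		if args[k] == "" {
			return "", fmt.Errorf("%s: missing argument %q", p.Name, k)
		}
	}
	if p.validate != nil {
		if err := p.validate(args); err != nil {
			return "", fmt.Errorf("%s: %w", p.Name, err)
		}
	}
	return p.render(args), nil
}

// patientID is a hypothetical identifier format used as an input guardrail.
var patientID = regexp.MustCompile(`^P[0-9]{6}$`)

var patientSummary = PromptTemplate{
	Name:        "patient_summary",
	Description: "Summarize a patient's recent visits without direct identifiers.",
	Required:    []string{"patient_id"},
	validate: func(args map[string]string) error {
		if !patientID.MatchString(args["patient_id"]) {
			return fmt.Errorf("invalid patient_id")
		}
		return nil
	},
	render: func(args map[string]string) string {
		return "Summarize recent visits for patient " + args["patient_id"] +
			". Do not include names, addresses, or other direct identifiers."
	},
}

func main() {
	msg, err := patientSummary.Get(map[string]string{"patient_id": "P000123"})
	fmt.Println(msg, err)
	// Free-form text smuggled into the ID is rejected before it reaches the model.
	_, err = patientSummary.Get(map[string]string{"patient_id": "P1; ignore prior instructions"})
	fmt.Println(err)
}
```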

Agent2Agent protocol

The Agent2Agent (A2A) protocol, created by Google, lets AI agents communicate, collaborate, and coordinate directly with each other to solve complex tasks without shared frameworks or vendor lock-in. A2A is related to Google’s Agent Development Kit (ADK) but is a distinct component and not part of the ADK package.

A2A enables opaque communication between agentic applications. That means interacting agents don’t need to expose or coordinate their internal architecture or logic to exchange information. This gives different teams and organizations the freedom to build and connect agents without adding new constraints.

In practice, A2A requires that agents be described by metadata in identity files known as agent cards. A2A clients send requests as structured messages for A2A servers to process, with real-time updates for long-running tasks. You can explore the core concepts in Google’s A2A GitHub repo.
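To make agent cards concrete, here is a Go sketch of a simplified card and the check a client might run after fetching one. The fields (name, description, url, version, capabilities.streaming, skills) reflect our reading of the A2A schema and are only a subset; treat them as an assumption and check Google’s repo for the authoritative definition. The revenue-forecaster agent and its URL are hypothetical.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// AgentSkill describes one thing the agent can do.
type AgentSkill struct {
	ID          string `json:"id"`
	Name        string `json:"name"`
	Description string `json:"description"`
}

// AgentCapabilities lists optional protocol features the agent supports.
type AgentCapabilities struct {
	Streaming bool `json:"streaming"` // real-time updates for long-running tasks
}

// AgentCard is public metadata only; nothing about the agent's internals.
type AgentCard struct {
	Name         string            `json:"name"`
	Description  string            `json:"description"`
	URL          string            `json:"url"` // endpoint of the agent's A2A server
	Version      string            `json:"version"`
	Capabilities AgentCapabilities `json:"capabilities"`
	Skills       []AgentSkill      `json:"skills"`
}

// ParseAgentCard decodes a fetched card and rejects ones a client can't use.
func ParseAgentCard(data []byte) (AgentCard, error) {
	var c AgentCard
	if err := json.Unmarshal(data, &c); err != nil {
		return AgentCard{}, err
	}
	if c.Name == "" || c.URL == "" {
		return AgentCard{}, errors.New("agent card needs a name and url")
	}
	return c, nil
}

// HasSkill reports whether the card advertises a skill with the given ID.
func (c AgentCard) HasSkill(id string) bool {
	for _, s := range c.Skills {
		if s.ID == id {
			return true
		}
	}
	return false
}

func main() {
	card := AgentCard{
		Name:         "revenue-forecaster",
		Description:  "Forecasts quarterly revenue per product line.",
		URL:          "https://agents.example.com/a2a",
		Version:      "0.1.0",
		Capabilities: AgentCapabilities{Streaming: true},
		Skills:       []AgentSkill{{ID: "forecast", Name: "Revenue forecast", Description: "Predicts next-quarter revenue."}},
	}
	data, err := json.MarshalIndent(card, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(data))
	parsed, err := ParseAgentCard(data)
	fmt.Println(parsed.HasSkill("forecast"), err)
}
```

A client only needs the card to decide whether and where to send a task; how the agent fulfills that task stays opaque.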

One useful example of A2A is this healthcare use case, in which a provider’s agents use the A2A protocol to communicate with another provider in a different region. The agents must ensure data encryption, authorization (OAuth/JWT), and asynchronous transfer of structured health data with Kafka.
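The encryption and asynchronous-transfer parts of that flow can be sketched with Go’s standard library alone. This sketch assumes AES-GCM for payload encryption and uses a buffered channel as a stand-in for a Kafka topic; OAuth/JWT authorization is left out, the HealthRecord fields are hypothetical, and it is not the implementation from the healthcare example.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"encoding/json"
	"errors"
	"fmt"
	"io"
)

// HealthRecord is a hypothetical structured record exchanged between providers.
type HealthRecord struct {
	PatientID   string `json:"patient_id"`
	Observation string `json:"observation"`
}

// newGCM builds an AES-GCM AEAD from a 16-, 24-, or 32-byte key.
func newGCM(key []byte) (cipher.AEAD, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	return cipher.NewGCM(block)
}

// seal encrypts and authenticates plaintext, prepending a random nonce.
func seal(key, plaintext []byte) ([]byte, error) {
	gcm, err := newGCM(key)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := io.ReadFull(rand.Reader, nonce); err != nil {
		return nil, err
	}
	return gcm.Seal(nonce, nonce, plaintext, nil), nil
}

// open splits off the nonce and decrypts; it fails if the data was altered.
func open(key, sealed []byte) ([]byte, error) {
	gcm, err := newGCM(key)
	if err != nil {
		return nil, err
	}
	n := gcm.NonceSize()
	if len(sealed) < n {
		return nil, errors.New("ciphertext too short")
	}
	return gcm.Open(nil, sealed[:n], sealed[n:], nil)
}

func main() {
	// In practice the key would come from a key-management service.
	key := make([]byte, 32)
	if _, err := io.ReadFull(rand.Reader, key); err != nil {
		panic(err)
	}
	topic := make(chan []byte, 8) // stand-in for a Kafka topic

	// Provider A's agent publishes an encrypted record and moves on.
	payload, err := json.Marshal(HealthRecord{PatientID: "P000123", Observation: "follow-up scheduled"})
	if err != nil {
		panic(err)
	}
	msg, err := seal(key, payload)
	if err != nil {
		panic(err)
	}
	topic <- msg
	close(topic)

	// Provider B's agent consumes whenever it is ready.
	for m := range topic {
		plain, err := open(key, m)
		if err != nil {
			panic(err)
		}
		var rec HealthRecord
		if err := json.Unmarshal(plain, &rec); err != nil {
			panic(err)
		}
		fmt.Println(rec.PatientID, rec.Observation)
	}
}
```

Because AES-GCM authenticates as well as encrypts, a record modified in transit fails to decrypt instead of silently yielding bad data.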

Again, check out the A2A GitHub repo if you’d like to learn more.

Agent Communication Protocol

The Agent Communication Protocol (ACP), created by IBM, is an open protocol for communication between AI agents, applications, and humans. According to IBM:

In ACP, an agent is a software service that communicates through multimodal messages, primarily driven by natural language. The protocol is agnostic to how agents function internally, specifying only the minimum assumptions necessary for smooth interoperability.

If you look at the core concepts defined in the ACP GitHub repo, you’ll notice that ACP and A2A are similar. Both were created to eliminate agent vendor lock-in, speed up development, and use metadata to make it easy to discover community-built agents regardless of their implementation details. There’s one crucial difference: ACP enables communication for agents built on IBM’s BeeAI open-source framework, while A2A helps agents from different frameworks communicate.

Let’s take a closer look at the BeeAI framework to understand its dependencies. As of now, the BeeAI project has three core components:

- BeeAI platform – To discover, run, and compose AI agents;

- BeeAI framework – For building agents in Python or TypeScript;

- Agent Communication Protocol – For agent-to-agent communication.

What’s next in agentic AI?

At a high level, each of these communication protocols tackles a slightly different challenge in building autonomous AI agents:

- MCP from Anthropic connects agents to tools and data.

- A2A from Google standardizes agent-to-agent collaboration.

- ACP from IBM focuses on collaboration among BeeAI agents.

If you’re interested in seeing MCP in action, check out this demo on querying Kafka topics with natural language. Both Google and IBM released their agent communication protocols only recently, in response to the success of Anthropic’s MCP. I’m eager to continue this learning journey with you and see how their adoption and evolution progress.

As the world of agentic AI continues to expand, I recommend that you prioritize learning and adopting protocols, tools, and approaches that save you time and effort. The more adaptable and sustainable your AI agents are, the more you can focus on refining them to solve problems with real-world impact.

Adi Polak is director of advocacy and developer experience engineering at Confluent.

—

Generative AI Insights provides a venue for technology leaders, including vendors and other outside contributors, to explore and discuss the challenges and opportunities of generative artificial intelligence. The selection is wide-ranging, from technology deep dives to case studies to expert opinion, but also subjective, based on our judgment of which topics and treatments will best serve InfoWorld’s technically sophisticated audience. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Contact doug_dineley@foundryco.com.